Ever since my 2015 vacation in Curaçao, I had hoped to come back to the Caribbean at some point and do much more diving on those awesome reefs. So when I was changing employers this year, I took the chance and organized a trip to Bonaire for myself and some of my buddies.

Bonaire, though also one of the Netherlands Antilles, is an altogether different “animal” than Curaçao. While Curaçao is frequented by tourists and divers alike, there is almost no “normal” tourism on Bonaire. Since it has only a handful of very small sand beaches, it holds little appeal for the typical tan-seeking tourist. However, thanks in large part to the efforts of diving legend Hans Hass, the coral reefs around Bonaire have been a protected nature reserve since 1979. Because of this, the reefs there have stayed quite spectacular, while the reefs of Curaçao and Aruba are suffering heavily from fishing, boat traffic and tourism.

We flew to Bonaire from Hamburg via Amsterdam with the Dutch airline KLM. Taking 16 hours (including a 3 1/2 hour layover in Amsterdam), it was quite a daunting journey. Luckily, with a full range of in-flight entertainment available these days, passing the time was not too hard. And after getting up at around 2 o’clock in the morning, sleep comes easily, even in the loud and cramped environment of an airliner. After an additional one-hour layover in Aruba, we touched down at Flamingo Airport on Bonaire. For that day, it was already clear that we would not do much more than pick up our rental car, drive to the villa we had rented and fall asleep rather quickly 😛.

The next day, after breakfast at a small motel, our first stop was the car rental office (to sign some papers). Right after that, we stocked up on food and supplies for the days to come (most things are quite expensive on Bonaire; the only exceptions are fruit and vegetables, as they come directly from Venezuela). Last but not least, we got our dive gear ready, drove to the dive base and picked up our cylinders for the first dive.

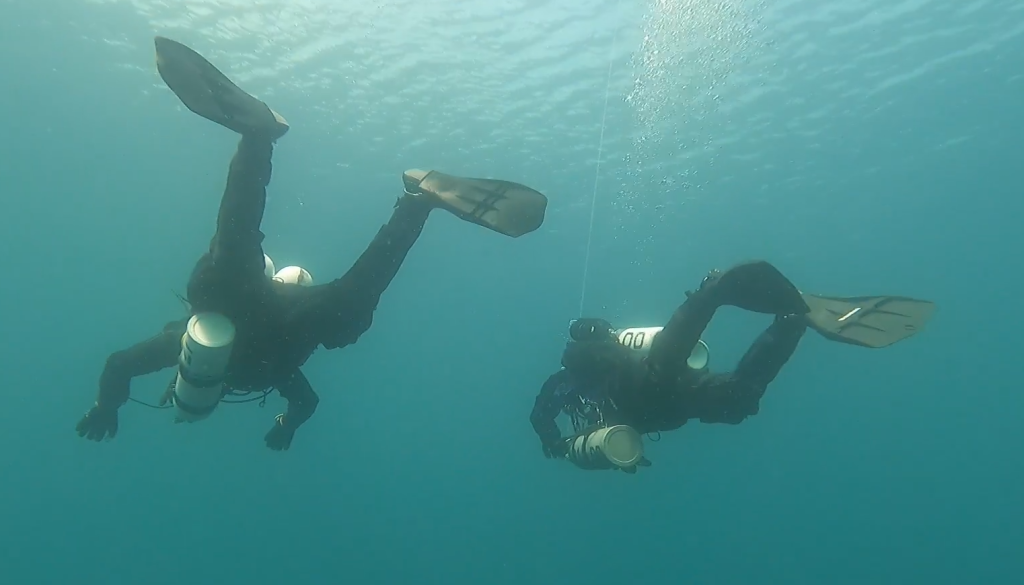

At this point it was already clear that we would not manage more than one dive that day, since, being new to the island, we had spent too much time getting settled. Entering the water, hoping for at least some relief from the 32°C heat outside, we were shocked to find the water barely cooler (31°C). It stays that way even at greater depths (the coolest temperature of the whole vacation was 29°C at 30 m). The promise of superb reefs and astonishing wildlife held true. On the very first dive, moments after we put our heads under the waves, we encountered a sea turtle. And the list of marine life we spotted just kept growing.

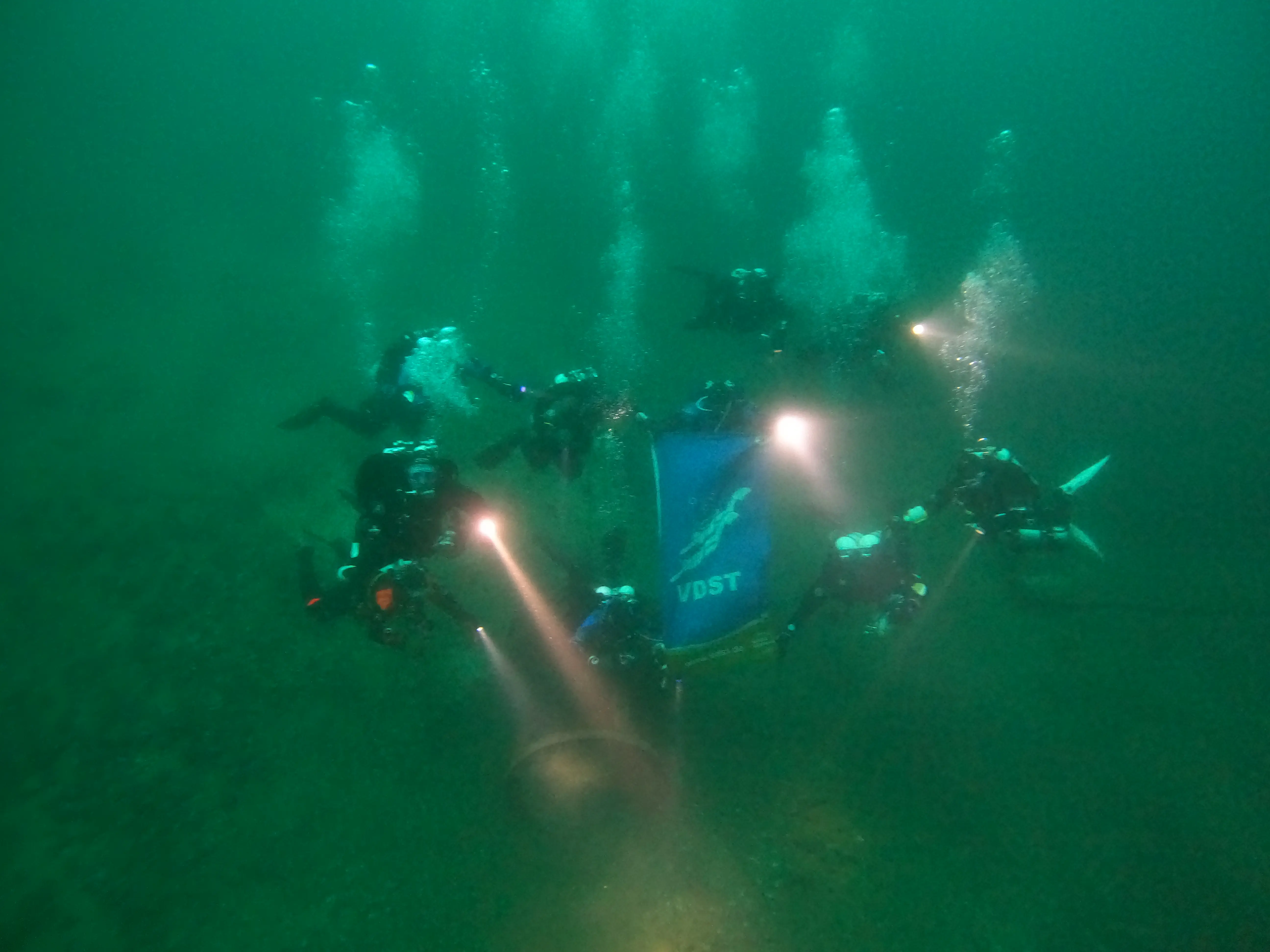

Over the next days, we settled into a routine of early breakfast at the villa, grabbing our cylinders at the dive base and then doing two dives during the day. Every now and then we added a night dive as the third dive of the day. One of the highlight dive sites was the wreck of the famous Hilma Hooker, where I switched from my macro to the wide-angle lens to get some especially impressive shots.

Sometimes we adjusted the routine a bit depending on what came up (unfortunately, I developed a bad ear infection and had to skip diving for a day). Right in the middle of the vacation, we also had a scheduled day off from diving, to give our bodies a chance to fully desaturate at least once. That day was instead filled with exploring the island above water: visiting Washington Slagbaai National Park, taking pictures of flamingos and hummingbirds, and grabbing dinner at the famous Lac Bay surfing area in the evening.

In the second half of the vacation, we took a trip to Klein Bonaire, where I spotted a seahorse while snorkeling (of course, the one time I did not have my camera with me). We also managed to get a nice little sunburn, as the island is practically nothing but white sandy beach and crystal-clear turquoise water.

Of course, souvenirs and a few things for ourselves could not be missing, so on one of the last days we also got some shopping done, bought postcards for our friends back home and enjoyed a bit of the island’s culture. As the vacation neared its end, we stepped up the diving again. That is what we were there for, after all.

But all things must come to an end, and so we found ourselves back at Flamingo Airport, hauling our luggage through the terminal and onto the plane. It wasn’t easy to say goodbye to the sun and the awesome diving. But having now been to the Caribbean for the second time, I am pretty sure I’ll be back someday soon ;-).

You can find all the pictures of the dives and the above-water fun on Bonaire in my gallery.